References

https://github.com/yaooqinn/spark-authorizer

Deploying the JARs

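Add the following packages to $SPARK_HOME/jars. Pay close attention to the versions of the Ranger JARs; it is safest to match the versions shown in the figure below: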

    eclipselink-2.5.2.jar

Deploying the configuration files

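Copy the Ranger-related configuration files from /etc/hive/2.6.5.0-292/0/conf.server into $SPARK_HOME/conf. If they live somewhere else on your machine, you can locate them by running: find / -name xxx.xml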

    ranger-hive-audit.xml
    ranger-hive-security.xml
    ranger-policymgr-ssl.xml
    ranger-security.xml

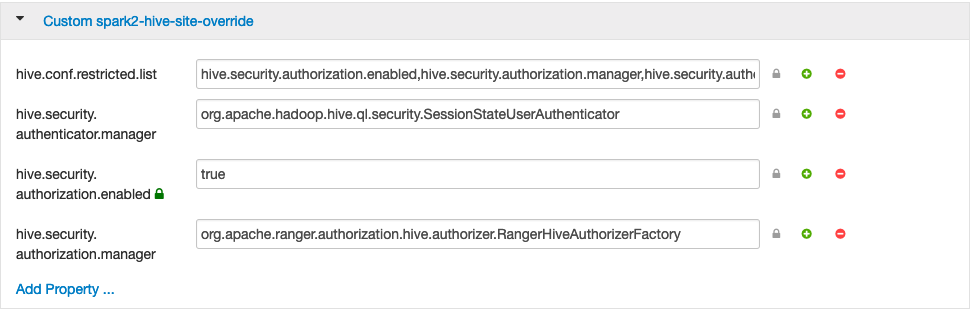
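A quick way to confirm the copy worked is to check that each of the four Ranger files is present in the target directory. The following is a minimal sketch using only the Java standard library; the class name `RangerConfCheck` and the convention of passing the directory as an argument (or falling back to the `SPARK_HOME` environment variable) are assumptions made for illustration, not part of spark-authorizer.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: not part of Spark, Ranger, or spark-authorizer.
public class RangerConfCheck {
    // The four Ranger files named in this section.
    static final List<String> REQUIRED = Arrays.asList(
            "ranger-hive-audit.xml",
            "ranger-hive-security.xml",
            "ranger-policymgr-ssl.xml",
            "ranger-security.xml");

    /** Returns the required file names that are not regular files in confDir. */
    static List<String> missingFiles(Path confDir) {
        List<String> missing = new ArrayList<>();
        for (String name : REQUIRED) {
            if (!Files.isRegularFile(confDir.resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Assumption: the conf directory is the first argument; otherwise it
        // is derived from the SPARK_HOME environment variable.
        String sparkHome = System.getenv("SPARK_HOME");
        if (args.length == 0 && sparkHome == null) {
            System.err.println("usage: RangerConfCheck <conf-dir> (or set SPARK_HOME)");
            return;
        }
        Path confDir = args.length > 0 ? Paths.get(args[0]) : Paths.get(sparkHome, "conf");
        List<String> missing = missingFiles(confDir);
        System.out.println(missing.isEmpty()
                ? "All Ranger config files found in " + confDir
                : "Missing from " + confDir + ": " + missing);
    }
}
```

Running it against $SPARK_HOME/conf on each node that launches Spark jobs should report no missing files before you move on to the next step.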

Then, in the Ambari UI, go to the Spark configuration section and add the following properties to hive-site.xml:

    <property>
        <name>hive.security.authorization.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>hive.security.authorization.manager</name>
        <value>org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory</value>
    </property>
    <property>
        <name>hive.security.authenticator.manager</name>
        <value>org.apache.hadoop.hive.ql.security.SessionStateUserAuthenticator</value>
    </property>
    <property>
        <name>hive.conf.restricted.list</name>
        <value>hive.security.authorization.enabled,hive.security.authorization.manager,hive.security.authenticator.manager</value>
    </property>
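Because these values are long and easy to mistype, it can help to verify the deployed hive-site.xml programmatically. The sketch below parses a Hadoop-style configuration file with the JDK's built-in DOM parser and reports which of the three authorization properties above are missing or differ. The class name `HiveSiteCheck` and its methods are hypothetical, and it assumes the usual `<configuration>` wrapper around `<property>` elements.

```java
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: not part of Hive, Ranger, or spark-authorizer.
public class HiveSiteCheck {
    // Values copied from the properties listed in this section.
    static final Map<String, String> EXPECTED = new LinkedHashMap<>();
    static {
        EXPECTED.put("hive.security.authorization.enabled", "true");
        EXPECTED.put("hive.security.authorization.manager",
                "org.apache.ranger.authorization.hive.authorizer.RangerHiveAuthorizerFactory");
        EXPECTED.put("hive.security.authenticator.manager",
                "org.apache.hadoop.hive.ql.security.SessionStateUserAuthenticator");
    }

    /** Reads every <property><name>/<value> pair from a Hadoop-style XML file. */
    static Map<String, String> readProperties(InputStream in) throws Exception {
        NodeList props = DocumentBuilderFactory.newInstance()
                .newDocumentBuilder().parse(in).getElementsByTagName("property");
        Map<String, String> result = new LinkedHashMap<>();
        for (int i = 0; i < props.getLength(); i++) {
            Element property = (Element) props.item(i);
            String name = childText(property, "name");
            if (name != null) {
                result.put(name, childText(property, "value"));
            }
        }
        return result;
    }

    private static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    /** Returns the expected property names that are missing or have another value. */
    static List<String> problems(Map<String, String> actual) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, String> e : EXPECTED.entrySet()) {
            if (!e.getValue().equals(actual.get(e.getKey()))) {
                out.add(e.getKey());
            }
        }
        return out;
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.err.println("usage: HiveSiteCheck <path-to-hive-site.xml>");
            return;
        }
        try (InputStream in = Files.newInputStream(Paths.get(args[0]))) {
            System.out.println("Properties needing attention: " + problems(readProperties(in)));
        }
    }
}
```

An empty list means all three authorization properties are set as described; `hive.conf.restricted.list` is left out of the check because clusters often append further entries to it.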

Add the following line to spark-defaults.conf:

    spark.sql.extensions=org.apache.ranger.authorization.spark.authorizer.RangerSparkSQLExtension

Configuration in the Spark job

Add the following file to the project's resources directory:

    hive-site.xml
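The file is placed under resources so that it ends up on the job's classpath, which is where Hive's configuration loading looks for hive-site.xml. As a rough sanity check, assuming a Maven-style layout in which the resources directory is copied to the classpath root, a few lines of plain Java can confirm the file is visible at runtime. The class name `HiveSiteOnClasspath` is made up for this example.

```java
import java.net.URL;

// Hypothetical helper: not part of Spark or spark-authorizer.
public class HiveSiteOnClasspath {
    /** Returns the URL of the named classpath resource, or null if it is absent. */
    static URL locate(String resourceName) {
        ClassLoader loader = Thread.currentThread().getContextClassLoader();
        if (loader == null) {
            // Fall back to the system class loader when no context loader is set.
            loader = ClassLoader.getSystemClassLoader();
        }
        return loader.getResource(resourceName);
    }

    public static void main(String[] args) {
        URL url = locate("hive-site.xml");
        System.out.println(url == null
                ? "hive-site.xml is NOT on the classpath"
                : "hive-site.xml found at " + url);
    }
}
```

Calling `locate("hive-site.xml")` at the start of the job's `main` method and logging the result also shows which copy wins when several are present on the classpath.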

The configuration also has to be set in the program:

    SparkConf sparkConf = new SparkConf();